Foreword

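When building automation workflows you run into all sorts of captchas, and image captchas are by far the most common. This post documents how I cracked one.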

Environment

Windows 10 Pro 64-bit

PyCharm 2021.2 Community

Python 3.8.5 64-bit

tensorflow 2.5.0

keras 2.4.3

opencv-python 4.5.3.56

scikit-learn 0.24.2

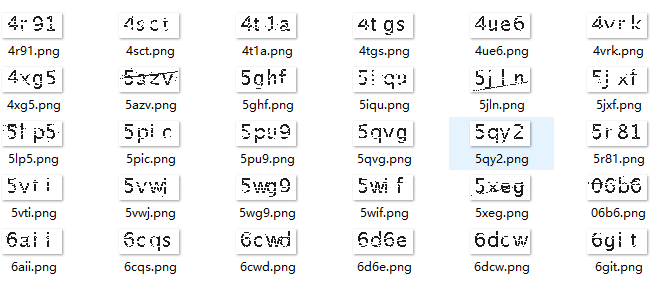

目标验证码

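Both the training set (1,000 hand-labelled images) and the validation set (100 hand-labelled images) consist of preprocessed captchas; for various reasons the original images can't be shared.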
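Both scripts below read the ground truth from the file name (a hypothetical `ab3x.png` is labelled `ab3x`), and the splitting loop pairs crop boxes with characters via `zip`, which silently drops characters if a label has the wrong length. An uppercase label is also risky on Windows, where `outputs/A` and `outputs/a` are the same folder. A minimal sketch for catching mislabelled files before splitting, assuming 4-character labels from 0-9 and a-z (the 36 classes the model uses later); all helper names are hypothetical:

```python
import os
import string

# Assumptions (not stated in the original): every captcha has exactly 4
# characters, all drawn from the digits 0-9 and lowercase letters a-z.
ALPHABET = set(string.digits + string.ascii_lowercase)
CAPTCHA_LENGTH = 4


def label_from_path(path):
    """Captcha text encoded in the file name, e.g. inputs/ab3x.png -> 'ab3x'."""
    return os.path.splitext(os.path.basename(path))[0]


def is_valid_label(label):
    """True if the label has the expected length and only allowed characters."""
    return len(label) == CAPTCHA_LENGTH and set(label) <= ALPHABET


def find_bad_labels(paths):
    """Paths whose file names do not look like valid captcha labels."""
    return [p for p in paths if not is_valid_label(label_from_path(p))]


if __name__ == "__main__":
    folder = "inputs"  # same input folder name as the splitting script
    if os.path.isdir(folder):
        files = [os.path.join(folder, f) for f in os.listdir(folder)]
        for bad in find_bad_labels(files):
            print("suspicious label:", bad)
```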

Splitting characters

The main code is as follows:

```python
# -*- coding: utf-8 -*-
"""
Created on Thu Aug 5 09:28:38 2021

@author: ljc545w
"""
import glob
import os.path

import cv2

# Folder with the labelled captchas (file name = captcha text)
CAPTCHA_IMAGE_FOLDER = "inputs"
# Where the cropped single-character images are saved
OUTPUT_FOLDER = "outputs"

# Collect every captcha image in the input folder into a list
captcha_image_files = glob.glob(os.path.join(CAPTCHA_IMAGE_FOLDER, "*"))
counts = {}

# Fixed crop boxes as (x1, y1, x2, y2). They split a 60x25 captcha into four
# slices 16-17 px wide; neighbouring slices overlap by 1-2 px so a character
# sitting on a boundary is not cut off. Slices are resized to 15x25 at training time.
letter_image_regions = [(0, 0, 16, 25), (14, 0, 31, 25), (30, 0, 46, 25), (44, 0, 60, 25)]

# Loop over every captcha
for (i, captcha_image_file) in enumerate(captcha_image_files):
    print("[INFO] processing image {}/{}".format(i + 1, len(captcha_image_files)))

    # The labelled text is the file name without its extension
    filename = os.path.basename(captcha_image_file)
    captcha_correct_text = os.path.splitext(filename)[0]

    # Read the image and convert it to grayscale. cv2.imread cannot open paths
    # containing Chinese characters (it returns None), so keep paths ASCII-only.
    image = cv2.imread(captcha_image_file)
    if image is None:
        print("[WARN] could not read {}, skipping".format(captcha_image_file))
        continue
    gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)

    # Save each character as its own image
    for letter_bounding_box, letter_text in zip(letter_image_regions, captcha_correct_text):
        x1, y1, x2, y2 = letter_bounding_box
        letter_image = gray[y1:y2, x1:x2]

        # One output sub-folder per character, e.g. outputs/a/
        save_path = os.path.join(OUTPUT_FOLDER, letter_text)
        os.makedirs(save_path, exist_ok=True)

        # Per-character counter so files do not overwrite each other
        count = counts.get(letter_text, 1)
        p = os.path.join(save_path, "{}.png".format(str(count).zfill(6)))
        cv2.imwrite(p, letter_image)
        counts[letter_text] = count + 1
```
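The four crop boxes are fixed rather than detected. This stdlib sketch takes the same boxes and reports each slice's width and the overlap between neighbours, which may help when adapting them to a captcha of a different size; the helper names are illustrative, not part of the original scripts:

```python
# Crop boxes from the splitting script, as (x1, y1, x2, y2)
REGIONS = [(0, 0, 16, 25), (14, 0, 31, 25), (30, 0, 46, 25), (44, 0, 60, 25)]


def slice_widths(regions):
    """Width in pixels of each crop box."""
    return [x2 - x1 for (x1, _, x2, _) in regions]


def overlaps(regions):
    """Pixels shared by each pair of neighbouring boxes (0 if there is a gap)."""
    ordered = sorted(regions)
    return [max(0, a[2] - b[0]) for a, b in zip(ordered, ordered[1:])]


def covers_width(regions, image_width):
    """True if the boxes span 0..image_width horizontally with no gaps."""
    reach = 0
    for x1, _, x2, _ in sorted(regions):
        if x1 > reach:  # a column between reach and x1 is not covered
            return False
        reach = max(reach, x2)
    return reach == image_width


print(slice_widths(REGIONS))      # [16, 17, 16, 16]
print(overlaps(REGIONS))          # [2, 1, 2]
print(covers_width(REGIONS, 60))  # True
```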

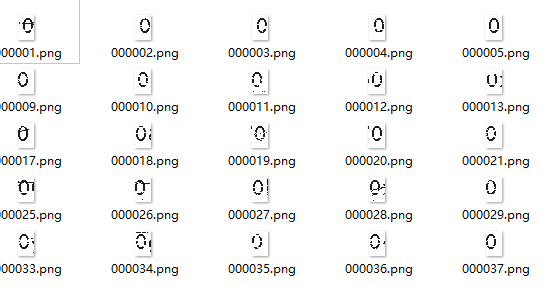

分割后的文件夹示例:

Training the model

Now we can train the model, using a Keras Sequential model to build a simple convolutional network.
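To see what this network does to a 25x15 character image, the shapes can be traced by hand. The sketch below (plain Python, hypothetical helper names) mirrors the layer stack of the training script, assuming Keras's rules that a `padding="same"` convolution with stride 1 keeps the spatial size and that `MaxPooling2D` defaults to `"valid"` padding; the parameter total should agree with what `model.summary()` reports for that model.

```python
def conv_same(shape, filters, kernel):
    """Conv2D, stride 1, padding='same': spatial size is unchanged."""
    h, w, c = shape
    params = kernel * kernel * c * filters + filters  # weights + one bias per filter
    return (h, w, filters), params


def max_pool(shape, pool=2, stride=2):
    """MaxPooling2D with the default padding='valid'; no trainable params."""
    h, w, c = shape
    return ((h - pool) // stride + 1, (w - pool) // stride + 1, c), 0


def summarize(input_shape=(25, 15, 1), hidden=500, num_classes=36):
    """Shape after each conv/pool layer, flattened size and total params."""
    layers = [
        lambda s: conv_same(s, 20, 5),
        max_pool,
        lambda s: conv_same(s, 50, 5),
        max_pool,
    ]
    shape, total, shapes = input_shape, 0, []
    for layer in layers:
        shape, params = layer(shape)
        total += params
        shapes.append(shape)
    flat = shape[0] * shape[1] * shape[2]
    total += flat * hidden + hidden              # Dense(500)
    total += hidden * num_classes + num_classes  # Dense(36) output layer
    return shapes, flat, total


shapes, flat, total = summarize()
print(shapes)       # [(25, 15, 20), (12, 7, 20), (12, 7, 50), (6, 3, 50)]
print(flat, total)  # 900 494106
```

Almost all of the roughly 494k parameters sit in the 900-to-500 dense layer, so that layer is the first place to shrink if the model needs to be smaller.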

The code is as follows:

import cv2

import pickle

import os.path

import numpy as np

from imutils import paths

from sklearn.preprocessing import LabelBinarizer

from sklearn.model_selection import train_test_split

from keras.models import Sequential

from keras.layers.convolutional import Conv2D, MaxPooling2D

from keras.layers.core import Flatten, Dense

from helpers import resize_to_fit

# 训练集的路径

LETTER_IMAGES_FOLDER = "outputs"

# 模型保存的名字

MODEL_FILENAME = "model.hdf5"

# 用于存储二值化后的标签对象

MODEL_LABELS_FILENAME = "labels.dat"

# 初始化

data = []

labels = []

# 遍历所有图片

for image_file in paths.list_images(LETTER_IMAGES_FOLDER):

# 读取图片并转换为灰度

image = cv2.imread(image_file)

image = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)

# 调整图片的尺寸,改为15x25(这个函数下面会写)

image = resize_to_fit(image, 15, 25)

# 为图片增加一个通道

image = np.expand_dims(image, axis=2)

# 获取标签名

label = image_file.split(os.path.sep)[-2]

# 将转换后的图片矩阵和标签添加到对应的列表

data.append(image)

labels.append(label)

# 缩放像素点

data = np.array(data, dtype="float") / 255.0

# 标签转换为矩阵

labels = np.array(labels)

# 分割训练集、测试集

(X_train, X_test, Y_train, Y_test) = train_test_split(data, labels, test_size=0.25, random_state=0)

# 标签二值化

lb = LabelBinarizer().fit(Y_train)

Y_train = lb.transform(Y_train)

Y_test = lb.transform(Y_test)

# 将二值化后的LabelBinarizer标签以二进制形式存储,方便后续调用

with open(MODEL_LABELS_FILENAME, "wb") as f:

pickle.dump(lb, f)

# 创建网络

model = Sequential()

# 第一层卷积,input_shape要和上面resize的大小保持一致,但注意宽和高是相反的!

model.add(Conv2D(20, (5, 5), padding="same", input_shape=(25, 15, 1), activation="relu"))

model.add(MaxPooling2D(pool_size=(2, 2), strides=(2, 2)))

# 第二层卷积

model.add(Conv2D(50, (5, 5), padding="same", activation="relu"))

model.add(MaxPooling2D(pool_size=(2, 2), strides=(2, 2)))

# 具有500个节点的隐藏层

model.add(Flatten())

model.add(Dense(500, activation="relu"))

# 具有36个节点的输出层(0-9+a-z,不含大写字母)

model.add(Dense(36, activation="softmax"))

# 使用keras构建tensorflow模型

model.compile(loss="categorical_crossentropy", optimizer="adam", metrics=["accuracy"])

# 训练网络,这里迭代了100次

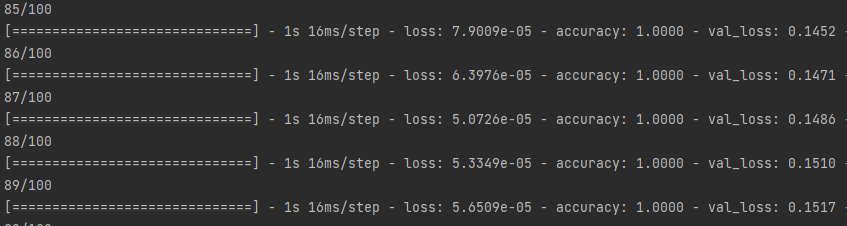

model.fit(X_train, Y_train, validation_data=(X_test, Y_test), batch_size=36, epochs=100, verbose=1)

# 保存训练好的模型

model.save(MODEL_FILENAME)
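For reference, scikit-learn's `LabelBinarizer` keeps the sorted class labels in `classes_`, `transform` turns each character into a one-hot row, and with more than two classes `inverse_transform` maps a row of scores back to the class with the largest value. A stdlib-only illustration of that behaviour (not scikit-learn's code; helper names are hypothetical), which also shows why the output `Dense` layer needs exactly `len(lb.classes_)` units:

```python
import string


def fit_classes(labels):
    """Sorted unique labels, as in LabelBinarizer.classes_."""
    return sorted(set(labels))


def one_hot(label, classes):
    """One row of LabelBinarizer.transform for a multi-class problem."""
    return [1 if c == label else 0 for c in classes]


def decode(scores, classes):
    """Pick the class with the highest score (argmax), like inverse_transform."""
    best = max(range(len(scores)), key=lambda i: scores[i])
    return classes[best]


# The 36 classes the model is built for: digits sort before letters
classes = fit_classes(string.digits + string.ascii_lowercase)
softmax_like = [0.0] * len(classes)
softmax_like[classes.index("l")] = 0.6
softmax_like[classes.index("1")] = 0.4
print(len(classes), decode(softmax_like, classes))  # 36 l
```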

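helpers.py:

```python
import cv2
import imutils


def resize_to_fit(image, width, height):
    """
    Resize an image to exactly (width, height).

    :param image: the input image
    :param width: target width
    :param height: target height
    :return: the resized image
    """
    (h, w) = image.shape[:2]
    # First scale the longer side to its target while keeping the aspect ratio
    if w > h:
        image = imutils.resize(image, width=width)
    else:
        image = imutils.resize(image, height=height)
    # Then force the exact target size (this step may distort the aspect ratio)
    image = cv2.resize(image, (width, height))
    return image
```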
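Since the crops are 16-17 px wide and 25 px tall, it can help to trace their sizes through `resize_to_fit`. The sketch below reproduces only the size arithmetic, assuming `imutils.resize` scales the other side by the same ratio and truncates with `int()` (as far as I know this matches its source); the hypothetical helpers show that for these crops the aspect-preserving first step changes nothing and the final `cv2.resize` simply squeezes them to 15 px wide:

```python
def imutils_resize_dims(w, h, width=None, height=None):
    """(new_w, new_h) that imutils.resize computes when given one target side."""
    if width is None:
        r = height / float(h)
        return int(w * r), height
    r = width / float(w)
    return width, int(h * r)


def resize_to_fit_dims(w, h, width, height):
    """Sizes produced by the two steps of resize_to_fit for a w x h input."""
    if w > h:
        step1 = imutils_resize_dims(w, h, width=width)
    else:
        step1 = imutils_resize_dims(w, h, height=height)
    return step1, (width, height)  # the final cv2.resize always yields (width, height)


for crop_width in (16, 17):
    print(resize_to_fit_dims(crop_width, 25, 15, 25))
# ((16, 25), (15, 25))
# ((17, 25), (15, 25))
```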

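Because the training set is small, training is quite fast even without a GPU; the training log is shown in the screenshot above.

My guess is that the model had already reached its best fit by around epoch 84.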
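Instead of reading the best epoch off the log, Keras can track it: passing `callbacks=[EarlyStopping(monitor="val_accuracy", patience=10, restore_best_weights=True)]` (imported from `keras.callbacks`) to `model.fit` stops training once validation accuracy stops improving and rolls back to the best weights. The metric name assumes it is logged as `val_accuracy`, as in TensorFlow 2.x Keras, and the patience of 10 is an arbitrary choice. The stdlib sketch below only illustrates the basic patience rule on made-up numbers; `early_stop_epoch` is a hypothetical helper:

```python
def early_stop_epoch(val_scores, patience):
    """Return (best_epoch, stop_epoch), both 1-based.

    Stops after `patience` consecutive epochs without a strictly higher score,
    the basic rule behind EarlyStopping with mode='max' and min_delta=0.
    """
    best_score = float("-inf")
    best_epoch = 0
    wait = 0
    for epoch, score in enumerate(val_scores, start=1):
        if score > best_score:
            best_score, best_epoch, wait = score, epoch, 0
        else:
            wait += 1
            if wait >= patience:
                return best_epoch, epoch
    return best_epoch, len(val_scores)


# Made-up validation accuracies, not the author's training log
print(early_stop_epoch([0.80, 0.90, 0.95, 0.94, 0.95, 0.93], patience=3))  # (3, 6)
```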

Model validation

Next, the 100 validation images (not used in training) are used to measure the model's accuracy. The code is as follows:

```python
import os.path
import pickle
import time

import cv2
import numpy as np
from imutils import paths
from keras.models import load_model

from helpers import resize_to_fit

MODEL_FILENAME = "model.hdf5"
MODEL_LABELS_FILENAME = "labels.dat"
# Validation set folder
CAPTCHA_IMAGE_FOLDER = "test"

# Unpickle the LabelBinarizer saved during training (see the pickle module docs)
with open(MODEL_LABELS_FILENAME, "rb") as f:
    lb = pickle.load(f)

# Load the trained model
model = load_model(MODEL_FILENAME)

# Character crop boxes (x1, y1, x2, y2), same as in the splitting script
region = [(0, 0, 16, 25), (14, 0, 31, 25), (30, 0, 46, 25), (44, 0, 60, 25)]

captcha_image_files = list(paths.list_images(CAPTCHA_IMAGE_FOLDER))

# Number of captchas to test
total = 100
# Randomly pick `total` images; with exactly 100 validation images this selects all of them
captcha_image_files = np.random.choice(captcha_image_files, size=(total,), replace=False)

# Number of correctly recognised captchas
true_count = 0
start_time = time.time()

for image_file in captcha_image_files:
    image = cv2.imread(image_file)
    # Labelled text from the file name (portable, unlike splitting on '\\')
    result = os.path.splitext(os.path.basename(image_file))[0]
    image = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)

    # Recognise the characters one by one
    predictions = []
    for reg in region:
        # Crop this character's columns (full height)
        letter_image = image[:, reg[0]:reg[2]]
        letter_image = resize_to_fit(letter_image, 15, 25)
        # Scale to [0, 1] exactly as in training
        letter_image = letter_image.astype("float") / 255.0
        # Reshape to (1, 25, 15, 1) to match the input layer
        letter_image = np.expand_dims(letter_image, axis=2)
        letter_image = np.expand_dims(letter_image, axis=0)
        # Returns a 1x36 array of class probabilities
        prediction = model.predict(letter_image)
        # Decode the one-hot scores back to a character
        letter = lb.inverse_transform(prediction)
        predictions.append(letter[0])

    captcha_text = "".join(predictions)
    # Check whether the prediction matches the label
    if captcha_text == result:
        print("RESULT is: {}, CAPTCHA text is: {}, True".format(result, captcha_text))
        true_count += 1
    else:
        print("RESULT is: {}, CAPTCHA text is: {}, False".format(result, captcha_text))

end_time = time.time()
# Accuracy over whole captchas
print("predict rate: ", true_count / total)
# Elapsed time
print("time: {:.6f}s".format(end_time - start_time))
```

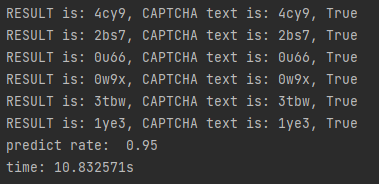

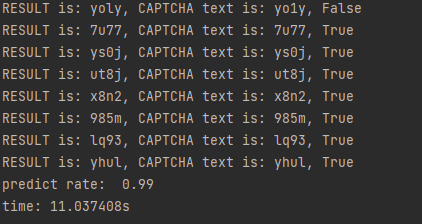

结果:

识别率达到了95%,还是很不错的,对吧~顺便再放一张训练集的识别结果:

唯一一个错误是没有分清字母l和数字1,如果按照字符的识别率,误差仅有0.25%!
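The 95% figure is measured per captcha and the 0.25% per character, so a single misread character costs a whole captcha. A small sketch computing both metrics from (label, prediction) pairs; the function names are hypothetical and the sample data is made up to mirror the training-set run:

```python
def captcha_accuracy(pairs):
    """Fraction of captchas whose whole predicted text matches the label."""
    return sum(truth == pred for truth, pred in pairs) / len(pairs)


def char_error_rate(pairs):
    """Fraction of individual characters predicted wrongly.

    Assumes each prediction has the same length as its label, which holds here
    because the script predicts exactly one character per crop box.
    """
    total = sum(len(truth) for truth, _ in pairs)
    wrong = sum(t != p for truth, pred in pairs for t, p in zip(truth, pred))
    return wrong / total


# Hypothetical run: 99 correct captchas and one with 'l' read as '1'
pairs = [("ab3x", "ab3x")] * 99 + [("l7kq", "17kq")]
print(captcha_accuracy(pairs), char_error_rate(pairs))  # 0.99 0.0025
```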

Resources

Link: 深度学习-验证码 (Deep learning - captcha)

Extraction code: utum